import os
import cv2
import dlib
import numpy as np
from collections import OrderedDict
import warnings

warnings.filterwarnings("ignore")

# Half-open (start, end) index ranges into the 68-point landmark array.
shape_predictor_68_face_landmark = OrderedDict([
    ('mouth', (48, 68)),
    ('right_eyebrow', (17, 22)),
    ('left_eye_brow', (22, 27)),
    ('right_eye', (36, 42)),
    ('left_eye', (42, 48)),
    ('nose', (27, 36)),
    ('jaw', (0, 17))
])

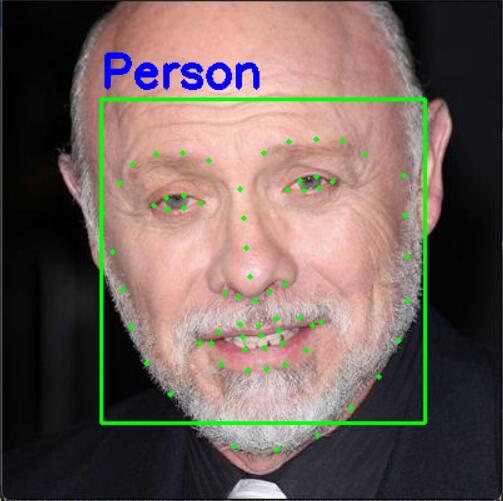

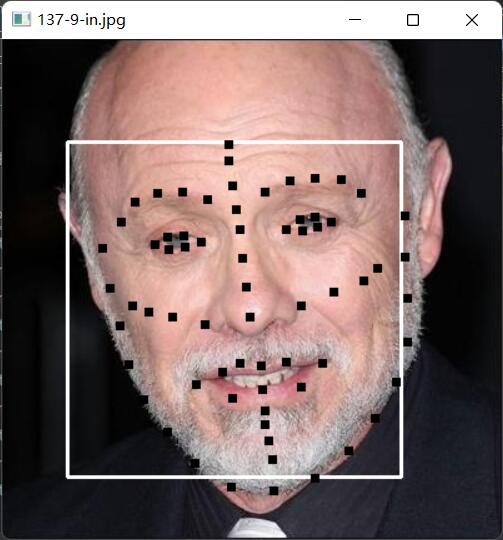
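The index pairs in `shape_predictor_68_face_landmark` read as half-open `(start, end)` ranges into the 68-point landmark sequence. A minimal sketch of slicing out one region, using dummy points in place of real dlib output; the helper name `region_points` is hypothetical and not part of the listing:

```python
from collections import OrderedDict

# Same ranges as in the listing: half-open (start, end) index pairs.
shape_predictor_68_face_landmark = OrderedDict([
    ('mouth', (48, 68)),
    ('right_eyebrow', (17, 22)),
    ('left_eye_brow', (22, 27)),
    ('right_eye', (36, 42)),
    ('left_eye', (42, 48)),
    ('nose', (27, 36)),
    ('jaw', (0, 17)),
])


def region_points(points, name):
    """Return the landmarks of one named region (hypothetical helper)."""
    start, end = shape_predictor_68_face_landmark[name]
    return points[start:end]


# Dummy landmarks standing in for the 68 (x, y) pairs of a real prediction.
dummy = [(i, i) for i in range(68)]
mouth = region_points(dummy, 'mouth')
print(len(mouth), mouth[0])
```

The same slicing works on the NumPy array returned by `predict2Np`, since it is indexed by landmark number along its first axis.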

def drawRectangle(detected, frame):
    # Draw a box and a label on `frame` for every detected face.
    margin = 0.2
    img_h, img_w, _ = np.shape(frame)
    if len(detected) > 0:
        for i, locate in enumerate(detected):
            x1, y1, x2, y2, w, h = locate.left(), locate.top(), locate.right() + 1, locate.bottom() + 1, locate.width(), locate.height()
            # Box enlarged by `margin` on each side, clamped to the image bounds.
            xw1 = max(int(x1 - margin * w), 0)
            yw1 = max(int(y1 - margin * h), 0)
            xw2 = min(int(x2 + margin * w), img_w - 1)
            yw2 = min(int(y2 + margin * h), img_h - 1)
            cv2.rectangle(frame, (x1, y1), (x2, y2), (0, 255, 0), 2)
            # Padded face crop; computed here but not used further in this script.
            face = frame[yw1:yw2 + 1, xw1:xw2 + 1, :]
            cv2.putText(frame, 'Person', (locate.left(), locate.top() - 10),
                        cv2.FONT_HERSHEY_SIMPLEX, 1.2, (255, 0, 0), 3)
    return frame

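def predict2Np(predict):
    # Convert a dlib landmark prediction into a (num_parts, 2) integer array.
    # `np.int` was removed in NumPy 1.24; the built-in `int` replaces it.
    dims = np.zeros(shape=(predict.num_parts, 2), dtype=int)
    length = predict.num_parts
    for i in range(0, length):
        dims[i] = (predict.part(i).x, predict.part(i).y)
    return dims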

detector = dlib.get_frontal_face_detector()

criticPoints = dlib.shape_predictor("shape_predictor_68_face_landmarks.dat")

def drawCriticPoints(detected, frame):
    # Draw the 68 landmarks of every detected face as small filled circles.
    for (step, locate) in enumerate(detected):
        dims = criticPoints(frame, locate)
        dims = predict2Np(dims)
        for (name, (i, j)) in shape_predictor_68_face_landmark.items():
            for (x, y) in dims[i:j]:
                # Cast NumPy integers to plain ints before handing them to OpenCV.
                cv2.circle(img=frame, center=(int(x), int(y)),
                           radius=2, color=(0, 255, 0), thickness=-1)
    return frame

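def signal_detect(img_path='137-9-in.jpg'):
    # Detect faces and landmarks in a single image and show the result.
    img = cv2.imread(img_path)
    if img is None:
        # cv2.imread returns None instead of raising when the file cannot be read.
        raise FileNotFoundError(img_path)
    detected = detector(img)
    frame = drawRectangle(detected, img)
    frame = drawCriticPoints(detected, frame)
    cv2.imshow('frame', frame)
    cv2.waitKey(0)
    cv2.destroyAllWindows()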

def detect_time():
    # Run detection on the default webcam until Esc is pressed.
    cap = cv2.VideoCapture(0)
    while cap.isOpened():
        ret, frame = cap.read()
        if not ret:
            # No frame was grabbed (camera unplugged or stream ended).
            break
        detected = detector(frame)
        frame = drawRectangle(detected, frame)
        frame = drawCriticPoints(detected, frame)
        cv2.imshow('frame', frame)
        key = cv2.waitKey(1)
        if key == 27:  # Esc
            break
    cap.release()
    cv2.destroyAllWindows()

if __name__ == '__main__':
    print('Pycharm')
    signal_detect()
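The margin arithmetic inside `drawRectangle` can be checked without dlib or OpenCV. The sketch below pulls it out into a standalone function; the name `padded_box` and its signature are assumptions made for illustration, and the box is taken as `(x1, y1, x2, y2)` with `x2` and `y2` already one past the last pixel, as in the listing.

```python
def padded_box(x1, y1, x2, y2, img_w, img_h, margin=0.2):
    """Grow a box by `margin` of its size per side, clamped to the image.

    Hypothetical helper mirroring the xw1/yw1/xw2/yw2 computation
    in drawRectangle; not part of the original script.
    """
    w = x2 - x1
    h = y2 - y1
    xw1 = max(int(x1 - margin * w), 0)
    yw1 = max(int(y1 - margin * h), 0)
    xw2 = min(int(x2 + margin * w), img_w - 1)
    yw2 = min(int(y2 + margin * h), img_h - 1)
    return xw1, yw1, xw2, yw2


# A 40x40 box in a 100x100 image, padded by half its size on every side.
box = padded_box(40, 40, 80, 80, img_w=100, img_h=100, margin=0.5)
print(box)
```

Clamping matters for faces near the border: without it the padded coordinates can go negative or exceed the image size, and the slice `frame[yw1:yw2 + 1, xw1:xw2 + 1, :]` would then return a crop that is misplaced or smaller than intended.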